Creatives are concerned about AI taking over the arts. But these tools show us why we shouldn’t be.

NeRFs, ChatGPT, DALL-E, Stable Diffusion. I’m sure you’ve been hearing these names nonstop over the last few months. If not, welcome back from under your rock.

These tools leverage AI and machine learning to create some spectacular results, be they pictures or entire essays. Whether that scares you, pisses you off, or inspires you, the truth is that creatives have been using AI tools for some time, perhaps without even knowing it.

Thanks to Ryan Connolly of Film Riot, we now have a solid list of these tools. Let’s go through them, see what they offer, and talk about why you shouldn’t worry about AI taking your job.

AI from Topaz Labs

You may have already heard of Topaz Labs if you’re a photographer who relies on denoising. Its Denoise AI plugin delivers impressive results while keeping your image sharp.

Topaz Video AI, on the other hand, handles video and does a whole lot more, including denoising, upscaling, deinterlacing, motion interpolation, and shake stabilization. It runs on Topaz Labs’ neural networks, all optimized for your local workstation, so there’s no need to wait in a queue on Discord.

Connolly used it to upscale a 320p video into something more usable. If you haven’t seen content in 320p, just know that it’s not something you want as your source.

Topaz Labs also has a dedicated upscaling tool for photos that can enlarge and enhance images by up to 600% while, the company claims, preserving image quality, texture, and detail. Connolly relied on this tool to upscale his AI-generated images, which are usually created at a lower resolution.

ColorLab AI

The next AI tool should be familiar to No Film School readers. We covered this color grading suite from ColorLab AI earlier this year and had a chance to play with a 2.0 build.

We were astonished by how it uses machine learning not only to set a baseline across footage from different camera brands but also to build our very own film emulation looks. You can also feed it reference images from your favorite films or photos and have the software match your footage to that look.

For creatives or colorists on a budget, this tool is helpful as an assistant, as Connolly also notes, and it can be relied on to add the finishing touches that get your project done faster.

The Adobe Mother Lode

Whether you’re a photographer working in Photoshop or a compositor working in After Effects, your work has already benefited from a little robot magic. Adobe Sensei, the company’s proprietary AI and machine learning technology, is the foundation for everything from upscaling to rotoscoping.

There’s a ton to explore here, but these are some of Connolly’s favorites.

Content-Aware Fill, which has been my go-to in Photoshop, is now available in After Effects. Just draw a mask around what you want to remove, and presto, it’s gone from your video. While you may need to redo this every few seconds or frames, it’s a lot better than rotoscoping and then painting in the areas by hand later.

Speaking of which, Roto Brush in After Effects also benefits from Adobe Sensei. Though many may not realize it, this tool relies heavily on AI to get a clean mask.

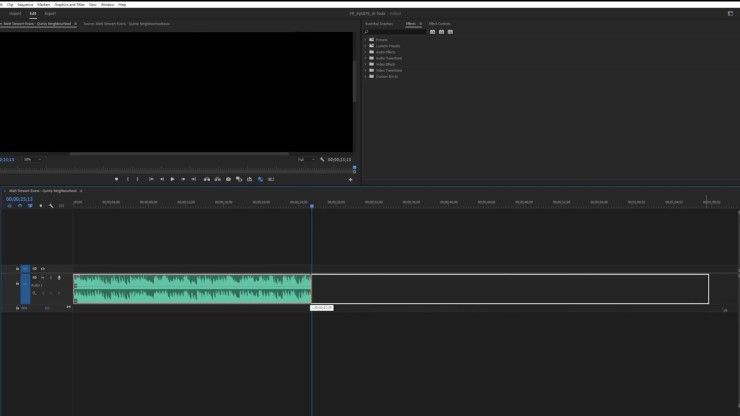

There are also some impressive tools in Premiere Pro. Scene Edit Detection uses Sensei to find and make the cuts for you, while Remix lets you stretch your music to the length you need. AI then “remixes” the track to fit that length, so creatives won’t need to sacrifice an important cut to awkward musical timing. While it’s not perfect, Connolly states that “it does a shockingly good job.” Anything else can be sweetened by hand.

Finally, Adobe added AI-based transcription to Premiere Pro this year, letting creatives generate captions or scripts.

There’s a whole lot more to explore, so if you have a Creative Cloud subscription, be sure to take a deep dive and discover all the cool things Adobe Sensei supports in your workflow.

AI in DaVinci Resolve

Living up to its name, Blackmagic Design has also built AI into its NLE suite to create some magic. The DaVinci Resolve Neural Engine powers features like Magic Mask, which works a bit like Roto Brush in After Effects but can be used on the edit page or the color page.

Creatives can use it to select and refine what they want to mask and then track it. This mask can then be used to make detailed adjustments to color and exposure, add blur to the background or foreground, composite something into the scene, and even create a depth map. The latter is great for adding depth of field, haze, or VFX elements that rely on a depth map.

One of our favorite tools, which Connolly only briefly mentioned, is Face Refinement in Resolve. I used it on a project last year both to make some facial adjustments an actor requested and to enhance an eye light in an important scene.

Animation From Stills

If you’ve been around the YouTube sphere, you’ve probably stumbled across Joel Haver and his absurd comedy. To get his distinctive look, Haver uses Photoshop and a tool called EBSynth (which is on Connolly’s list) to generate animated frames without much hand animation. Haver has also shared a breakdown of his workflow if you’re interested.

Connolly goes a bit more in-depth into the tech behind the EBSynth process, which can take one altered frame of your video and generate matching frames for the rest of the sequence. But there are some pitfalls to consider, as Haver mentions in his workflow breakdown.

Mix Your Own Audio

Finally, Connolly mentions iZotope Neutron and Voice.ai. The former is a great tool for mixing your audio, especially if you’re not a sound engineer, while the latter can take your voice or a piece of dialogue and transform it into a different voice altogether.

While Voice.ai might seem like a gimmick, it actually produces some incredible results that can really streamline the ADR process, especially if your actor is away on a different project.

AI Is Not All Doom and Gloom

In the past few months, creatives have been worried about their place in the artistic landscape, and rightly so. AI image generation has advanced by leaps and bounds in a matter of weeks, and the results have been incredible. Since ChatGPT came onto the scene, even screenwriters have started to worry about becoming obsolete.

But AI tools are just that. Tools. They learn from the foundation that artists have already built. While they can deliver impressive results, they can only do so on the backs of creatives. What we do with these tools will always surpass the stuff they create.

When all is said and done, AI can only create content. Creatives tell stories. So go out and tell stories because we don’t need more content. We need more stories.
